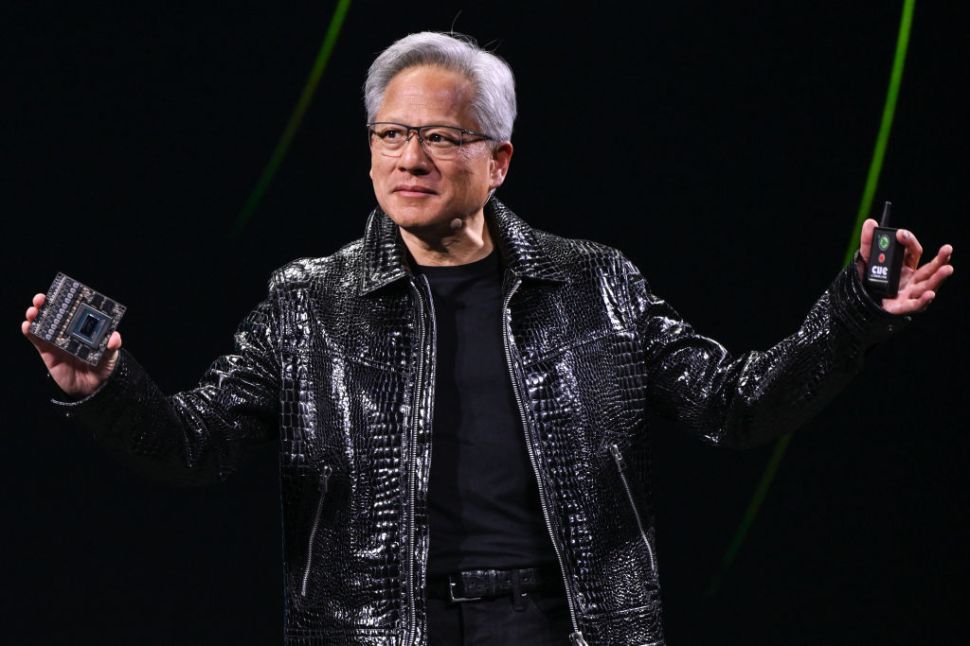

Earlier this week, an obscure Chinese A.I. startup named DeepSeek shook Silicon Valley after claiming that it had built a highly capable A.I. model, R1, for a fraction of what American rivals such as OpenAI and Google spend on their GPT and Gemini models. Major A.I. stocks plummeted in response. Nvidia, which makes the expensive chips (GPUs) that power the development of such A.I. models, saw its shares slide more than 16 percent on Monday (Jan. 27), erasing close to $600 billion from its market value.

Despite the negative financial impact, Nvidia praised DeepSeek’s breakthrough. “DeepSeek is an excellent A.I. advancement and a perfect example of test time scaling,” a company spokesperson told Observer in a statement. “DeepSeek’s work illustrates how new models can be created using that technique, leveraging widely available models and compute that is fully export control compliant.”

Test-time scaling is a relatively new technique that adjusts how much computation an A.I. model uses while it is generating answers (a stage known as inference), based on the complexity of the task. Before it emerged, there were two dominant approaches to scaling an A.I. model: pre-training and post-training. Pre-training scaling expands a model's training data and computing power during initial training, while post-training scaling fine-tunes the model to enhance its real-world performance.
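As a rough illustration of test-time scaling, the sketch below spends more inference compute on harder questions by drawing more answers from a model and taking a majority vote, a technique known as self-consistency. It is a simplified stand-in, not DeepSeek's method (R1 mainly scales test-time compute by producing longer chains of reasoning). `noisy_model`, `samples_for` and the 60 percent per-sample accuracy are hypothetical, and the task difficulty is passed in by hand, whereas real systems must estimate it or let the model decide how long to think.

```python
import random
from collections import Counter


def noisy_model(question: str, rng: random.Random) -> int:
    """Hypothetical stand-in for one model call: right 60% of the time."""
    correct = sum(int(x) for x in question.split("+"))
    if rng.random() < 0.6:
        return correct
    return correct + rng.choice([-2, -1, 1, 2])  # otherwise, a nearby wrong answer


def samples_for(difficulty: int) -> int:
    """Hypothetical compute budget: harder tasks get more samples."""
    return 1 + 4 * difficulty


def answer(question: str, difficulty: int, seed: int = 0) -> tuple[int, int]:
    """Return (majority-vote answer, number of model calls spent)."""
    rng = random.Random(seed)
    n = samples_for(difficulty)
    votes = Counter(noisy_model(question, rng) for _ in range(n))
    best, _ = votes.most_common(1)[0]
    return best, n


# Compare accuracy with 1 sample versus 21 samples over 200 seeded runs.
for difficulty in (0, 5):
    hits = sum(answer("2+3", difficulty, seed=s)[0] == 5 for s in range(200))
    print(f"{samples_for(difficulty)} samples: {hits / 200:.0%} correct")
```

Each extra sample costs another model call, so accuracy is bought with compute at answer time rather than during training, which is the trade-off test-time scaling exploits.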

According to DeepSeek's research papers, the V3 base model on which R1 is built was trained on 2,048 Nvidia H800 chips for a total cost of under $6 million. Some companies and A.I. insiders are skeptical of this claim. For example, Alexandr Wang, the founder and CEO of Scale AI, suspects that DeepSeek has more Nvidia chips than it is letting on.
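For a sense of scale, the claimed figure can be turned into compute time with a back-of-the-envelope calculation. The $2-per-GPU-hour rental rate is an assumption for illustration; actual rates vary, and the result counts only rented chip time, not research staff, earlier experiments or hardware purchases.

```python
def training_days(budget_usd: float, num_gpus: int, usd_per_gpu_hour: float) -> float:
    """Days of continuous training a budget buys at a given per-GPU rental rate."""
    gpu_hours = budget_usd / usd_per_gpu_hour  # total GPU-hours the budget covers
    hours_per_gpu = gpu_hours / num_gpus       # spread evenly across the cluster
    return hours_per_gpu / 24


# $6 million and 2,048 H800s come from the article; $2/GPU-hour is an assumed rate.
days = training_days(6_000_000, 2_048, 2.0)
print(f"about {days:.0f} days of training on 2,048 GPUs")  # about 61 days of training on 2,048 GPUs
```

At that assumed rate, the budget corresponds to roughly two months of continuous use of the whole cluster.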

Even with these scaling techniques, A.I. models often demand significant GPU power to generate output. To counter this, DeepSeek also released "distilled" versions of R1: smaller models trained to replicate the behavior of a larger A.I. system. Distilled models consume less computing power and memory while retaining much of the larger model's accuracy on tasks such as reasoning and coding, making them an efficient option for resource-constrained devices such as smartphones. Microsoft and OpenAI are currently investigating whether DeepSeek improperly used outputs from OpenAI's models, obtained through its API, to train R1 with distillation techniques.
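For readers curious about the mechanics, the sketch below shows the classic soft-target form of knowledge distillation from Hinton et al. (2015): a smaller "student" model is penalized by the KL divergence between its temperature-softened output probabilities and those of a larger "teacher". This is an illustrative assumption rather than DeepSeek's documented procedure; its R1 paper describes fine-tuning smaller open models on text generated by R1, a text-based variant of the same idea. The logit values are made up.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft targets"
    q = softmax(student_logits, temperature)  # student's current predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over three possible next tokens.
teacher = [4.0, 1.5, 0.5]
close_student = [3.5, 1.5, 0.8]
far_student = [0.5, 1.5, 4.0]
print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))  # True
```

Minimizing this loss over many examples pushes the student to mimic the teacher's full probability distribution, not just its top answer, which helps a much smaller model keep a useful share of the larger model's ability.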

“It’s important to note that the $6 million figure for R1 doesn’t account for the prior resources invested,” Itamar Friedman, the former director of machine vision at Alibaba, told Observer. “DeepSeek’s relatively low training cost figure likely represents just the final training step.” Friedman explained that while scaling and optimization are valuable, there are limits to how small an A.I. model or training process can be while still effectively simulating thinking and learning. “Large, high-cost systems will still have a major advantage,” he said.

Led by Liang Wenfeng, DeepSeek is headquartered in Hangzhou and was founded in 2023 as a spinoff of the hedge fund High-Flyer.